Technology Explained

Unlocking Online Privacy: The Power of MixNets Explained

In an age where online privacy is a growing concern, we often turn to familiar solutions like VPNs or Tor to safeguard our digital footprint. However, there’s a lesser-known player in the arena of online anonymity: MixNets. This article explains what MixNets are, how they work, and how they compare to the more well-known Tor and VPNs.

What Is MixNet?

MixNet is short for Mix Network, a technology designed to ensure the privacy and security of information transmitted over the internet. It achieves this by mixing data from various sources before it reaches its destination. This mixing process makes it extremely challenging for anyone to trace the origin or destination of the data.

While much internet traffic is encrypted by protocols like TLS (the successor to SSL), it still carries metadata that outsiders can analyze. MixNets shuffle that metadata to protect users’ privacy.

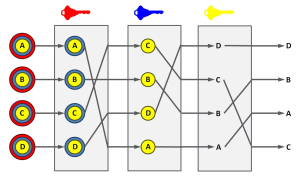

How Does a MixNet Work?

MixNets operate by implementing protocols that shuffle and mix data from multiple sources as it travels through a network of interconnected nodes. This includes mixing metadata such as geographical locations, sender and receiver IPs, message sizes, and send and receive times. The goal is to make it nearly impossible for outsiders to gain meaningful insights into users’ identities or the contents of the data.

Image by https://www.makeuseof.com/

MixNets consist of two key components:

- PKI (Public Key Infrastructure): This system distributes public key material and network connection information essential for MixNet operation. It’s crucial for the decentralized security of the network.

- Mixes: These are the cryptographic relay nodes within the mix network. They receive incoming messages, apply cryptographic transformations, and mix the data to prevent any observer from linking incoming and outgoing messages. The use of multiple independent nodes adds a layer of anonymity and collective resilience to the network.

While even one mix node can address privacy concerns, using at least three ensures added security and anonymity. Mixes split data into packets, encrypt it into ciphertext, and relay it through a predefined mix cascade to its destination. Additionally, mixes deliberately introduce latency to thwart timing-based attacks.
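The batching and shuffling described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ToyMix`, `pad`, `unpad`, and `run_cascade` are hypothetical names, the 64-byte packet size is arbitrary, and the encryption step is left out so the mixing logic stays visible. It is not how any particular MixNet is implemented.

```python
import random
import secrets
from dataclasses import dataclass, field


def pad(message: bytes, size: int = 64) -> bytes:
    """Pad a message to a fixed size so packet length reveals nothing."""
    if len(message) > size - 2:
        raise ValueError("message too long for one packet")
    filler = secrets.token_bytes(size - 2 - len(message))
    return len(message).to_bytes(2, "big") + message + filler


def unpad(packet: bytes) -> bytes:
    """Recover the original message from a padded packet."""
    length = int.from_bytes(packet[:2], "big")
    return packet[2:2 + length]


@dataclass
class ToyMix:
    """A toy mix node: collect a batch, shuffle it, then release it."""
    batch_size: int = 3
    _pool: list = field(default_factory=list)

    def receive(self, packet: bytes) -> list:
        """Queue a packet; return a shuffled batch once the pool is full."""
        self._pool.append(packet)
        if len(self._pool) < self.batch_size:
            return []  # the packet is held back: this is the added latency
        batch, self._pool = self._pool, []
        random.SystemRandom().shuffle(batch)  # break the arrival order
        return batch


def run_cascade(packets: list, hops: int = 3) -> list:
    """Send packets through `hops` mixes in sequence (a toy mix cascade)."""
    for _ in range(hops):
        mix = ToyMix(batch_size=len(packets))
        released = []
        for packet in packets:
            released.extend(mix.receive(packet))
        packets = released
    return packets


messages = [b"alice->bob", b"carol->dave", b"erin->frank"]
delivered = run_cascade([pad(m) for m in messages])
print(sorted(unpad(p) for p in delivered) == sorted(messages))
```

Every message still arrives, but all packets have the same length and leave each mix in a random order, so an observer watching one node cannot match inputs to outputs by size or position.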

MixNet vs. Tor

Tor, another popular technology for enhancing online privacy, employs a different approach known as onion routing. In this system, data is encrypted in layers and routed through a series of relays operated by volunteers before reaching its destination.

The sender encrypts the data in layers, one per relay. Each relay in a Tor circuit removes only its own layer with its unique key and knows only the previous and next hop, so no single relay learns both the traffic’s origin and its destination. Each layer of encryption adds complexity, making it challenging to trace the data’s path.

However, Tor relies on exit nodes, the final relays, to decrypt the last layer of encryption and send the data to its destination. This introduces a security concern if these final relays are compromised.
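To make the layering concrete, here is a minimal sketch of onion-style wrapping and peeling. It assumes a toy XOR cipher built from SHA-256, which is not secure and is not what Tor uses; the function names and the three relay keys are hypothetical and exist only for illustration.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Derive a toy keystream by hashing key + counter (NOT secure)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def xor_layer(data: bytes, key: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def wrap(message: bytes, relay_keys: list) -> bytes:
    """Sender side: add the exit relay's layer first, the entry relay's last.

    With this toy XOR cipher the order happens not to matter; with real
    layered ciphers it does.
    """
    for key in reversed(relay_keys):
        message = xor_layer(message, key)
    return message


def route(onion: bytes, relay_keys: list) -> bytes:
    """Network side: each relay in turn peels exactly one layer."""
    for key in relay_keys:
        onion = xor_layer(onion, key)
    return onion


keys = [b"entry-relay-key", b"middle-relay-key", b"exit-relay-key"]
onion = wrap(b"GET /index.html", keys)
print(route(onion, keys))      # all three layers removed
print(route(onion, keys[:2]))  # exit layer still in place: unreadable bytes
```

Only after the last relay has removed its layer does the original request appear, which is why a compromised exit relay is the sensitive point in this design.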

The choice between MixNets and Tor depends on specific requirements, including the desired level of anonymity, tolerance for latency, and network size. MixNets excel at preventing timing correlation and confirmation attacks, while Tor offers some resistance to website fingerprinting and Sybil attacks.

MixNet vs. VPN

VPNs (Virtual Private Networks), widely adopted for online anonymity and security, create an encrypted tunnel between the user and a server. This tunnel encrypts the user’s internet traffic, hiding their personal data, location, and browsing activity, thereby preventing eavesdropping.

VPNs are suitable for scenarios where location hiding, secure public Wi-Fi connections, access to region-restricted content, and general internet privacy are needed. However, their reliance on centralized servers raises trust and privacy concerns.

On the other hand, MixNets excel in situations demanding strong anonymity and metadata protection, offering a more decentralized architecture than VPNs at the cost of higher latency. However, they may require specialized software and protocols, potentially hindering widespread adoption.

Limitations of MixNets

While MixNets offer robust privacy protection, they are not without limitations:

- Latency: The mixing process introduces delays, which can affect real-time applications.

- Network Scalability: As user and message numbers increase, managing the required mix nodes becomes more complex.

- Bandwidth Overhead: Mixing increases data packet sizes, consuming more bandwidth than direct communication.

- User Inconvenience: MixNets may require specialized software and protocols, potentially deterring users.

- Sybil Attacks: MixNets can be vulnerable to attackers creating fake nodes.

Despite these limitations, emerging technologies like HOPR and Nym are addressing these issues, offering more scalability and convenience without compromising anonymity.

Should You Use MixNets?

Whether to adopt MixNets for online privacy depends on your specific needs, tolerance for latency and bandwidth overhead, and application compatibility. MixNets are ideal if strong anonymity and non-real-time applications are your priorities. However, for user-friendly solutions or real-time communication, they may not be the best choice. Careful evaluation of their advantages and limitations is crucial before deciding if MixNets are right for you.

In conclusion, MixNets represent a promising frontier in online privacy. Understanding their strengths, weaknesses, and applications is key to making an informed decision about integrating them into your digital security strategy. As the landscape of online privacy evolves, MixNets provide an intriguing option for those who prioritize anonymity and data protection in an increasingly connected world.

Consumer Services

Right Software Development Partner in India for Your 2026 Startup

Starting a startup in 2026 feels exciting, but also confusing. You have an idea, maybe even a small team, yet one big question always appears first: who will build your product? Most founders are not programmers. They need reliable custom software development services to turn their idea into a real app, website, or platform.

India has become one of the most trusted places for startups to build software. Why? Because you get skilled engineers, global experience, and affordable pricing in one place. From fintech apps in Dubai to healthcare software used in Europe and the US, many young companies rely on Indian development partners.

In this article, we will understand how startups should choose a development partner and explore ten reliable Indian companies that provide custom software development services suitable for early-stage businesses.

How to Choose the Right Development Partner

Before hiring any company, a founder should understand what they actually need. A startup does not require a huge corporate vendor. It needs guidance and flexibility.

Here is a simple decision checklist:

- Do they build MVPs for startups or only big enterprise projects?

- Do they help with product planning or just coding?

- Do they offer cloud setup on AWS or Google Cloud?

- Can they explain technical ideas in simple English?

- Do they support your product after launch?

A good provider of custom software development services acts like a technical cofounder. They tell you what features to delay, how to save cost, and how to launch faster. For example, many fintech startups first build only login, wallet, and payment features. They add analytics later. This approach saves both time and money.

Top 10 Software Development Companies in India for Startups

Below are ten reliable companies suitable for startup founders. Each offers custom software development and flexible engagement.

1. AppSquadz

AppSquadz focuses on startup MVP development and scalable digital products. They provide mobile app development, SaaS platforms, cloud solutions, and custom software development services based on business needs. Many education and healthcare startups choose them for UI/UX guidance and post-launch support.

2. Konstant Infosolutions

Known for mobile applications, especially ecommerce and booking apps. Startups launching marketplace platforms often use their affordable software development services. They have experience with payment integrations and real time chat systems.

3. TatvaSoft

This custom application development company works with startups building web platforms and dashboards. They are strong in .NET and React technologies. Several SaaS products serving the logistics industry were built by their teams.

4. PixelCrayons

Popular among international founders from the UK and Dubai. They offer dedicated developer hiring. Many early stage companies choose them when they need quick product development without hiring an internal team.

5. OpenXcell

A good option for AI and blockchain based startups. If your idea includes automation, chatbots, or analytics tools, they provide custom software development services with consulting support.

6. Radixweb

This firm helps startups scale after launch. Suppose your app suddenly gets 50,000 users. They help migrate it to AWS and optimize performance. That makes them useful for growing SaaS businesses.

7. MindInventory

Strong in UI and UX design. Many founders do not realize how important user experience is. This company focuses on making apps easy to use, which improves customer retention.

8. Hidden Brains

A long established custom software development provider that works with fintech and healthcare software projects. They also build ERP systems for startups entering manufacturing.

9. Net Solutions

Useful for ecommerce startups. They have experience with Shopify and headless commerce platforms. Many D2C brands launching online stores work with them.

10. SPEC INDIA

They focus on data driven platforms and analytics dashboards. Startups handling large customer data sets or reporting tools benefit from their expertise.

Why Startups Prefer India in 2026

A few years ago startups hired freelancers. Today they prefer a custom application development company. The reason is simple: startups now build complex products. Think about apps using AI, payment gateways, cloud storage, and real time chat. One freelancer cannot manage everything. India offers three big advantages:

First, cost. A startup in the United States may spend $120,000 to build an MVP. In India the same project can often be done for $15,000 to $30,000 using affordable software development services.

Second, cloud knowledge. Many Indian teams work daily with AWS and Google Cloud. They know how to make scalable systems. This means your app will not crash when 100 users become 10,000 users.

Third, startup understanding. Indian companies now follow agile methods. They release small updates weekly instead of waiting six months.

You might ask: Is cheaper always lower quality? No. Many Indian developers build SaaS products used worldwide. Cost is lower mainly because operating expenses are lower, not because skill is lower.
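The cost figures quoted above can be turned into a rough savings estimate. The short sketch below uses only the article’s own example numbers; `savings_percent` is a hypothetical helper name, and real quotes will vary by project.

```python
def savings_percent(us_cost: float, india_cost: float) -> float:
    """Share of the US budget saved by building at the lower cost."""
    return (us_cost - india_cost) / us_cost * 100


US_MVP = 120_000                            # example US figure from the article
INDIA_LOW, INDIA_HIGH = 15_000, 30_000      # example India range from the article

low = savings_percent(US_MVP, INDIA_HIGH)
high = savings_percent(US_MVP, INDIA_LOW)
print(f"Estimated savings: {low:.1f}% to {high:.1f}%")
# prints "Estimated savings: 75.0% to 87.5%"
```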

Conclusion

Choosing the right development partner in 2026 is not just a technical decision — it’s a strategic one. For early-stage startups, the company you hire will directly influence your product quality, launch speed, scalability, and even investor confidence.

India continues to be a preferred destination because it offers a strong balance of cost efficiency, technical expertise, and startup-focused execution. However, the real advantage does not come from geography alone; it comes from selecting a partner who understands MVP thinking, agile development, cloud scalability, and long-term product growth.

FAQs

1. Why do startups prefer hiring Indian software development companies?

Startups prefer Indian companies because they offer a strong balance of cost efficiency, technical expertise, and scalable development practices. Many firms in India have global experience working with startups from the US, Europe, and the Middle East, making them comfortable with international standards and agile workflows.

2. How much does it cost to build an MVP in India?

The cost of building a Minimum Viable Product (MVP) in India typically ranges between $15,000 and $30,000, depending on complexity, features, and technology stack. More advanced platforms involving AI, fintech integrations, or complex dashboards may cost more.

3. How long does it take to develop a startup MVP?

Most startup MVPs take 8 to 16 weeks to develop. Simple apps with limited features can launch faster, while SaaS platforms or fintech products may require additional time for backend development, security setup, and testing.

4. Should startups hire freelancers or a development company?

Freelancers may work for very small projects, but modern startup products often require backend development, UI/UX design, cloud setup, testing, and ongoing support. A development company provides a structured team, project management, and long-term maintenance — reducing risk for early-stage founders.

5. What should startups look for before signing a development contract?

Before signing, startups should check:

- Portfolio of similar startup projects

- Clear pricing structure

- Post-launch support policy

- Ownership of source code

- Experience with cloud platforms like Amazon Web Services and Google Cloud

Customer Services

SBCGlobal Email Not Receiving Emails: A Comprehensive Guide

Introduction

If you’re facing problems with SBCGlobal email not receiving emails, you’re not alone. Many users experience email delivery issues caused by server settings, outdated configurations, or account security errors. This guide will walk you through why your SBCGlobal email might not be receiving messages, how to fix it step-by-step, and when to contact professional support for advanced troubleshooting.

What Is SBCGlobal Email?

SBCGlobal.net is a legacy email service originally provided by Southwestern Bell Corporation, which later merged with AT&T. Even though new SBCGlobal accounts are no longer being created, millions of users still access their SBCGlobal email through AT&T’s Yahoo Mail platform.

However, because SBCGlobal operates on older infrastructure and server settings, users sometimes experience email syncing, login, or receiving issues, especially when using third-party apps or outdated settings.

Common Reasons SBCGlobal Email Is Not Receiving Emails

Before you start troubleshooting, it’s important to identify what might be causing the issue. Below are the most frequent culprits behind SBCGlobal email receiving problems:

- Incorrect Email Settings: If your incoming (IMAP/POP3) or outgoing (SMTP) settings are incorrect, emails won’t load properly.

- Server Outages: Temporary outages or server maintenance by AT&T or Yahoo may interrupt incoming mail delivery.

- Storage Limit Reached: When your mailbox exceeds its storage limit, new emails are automatically rejected.

- Spam or Filter Rules: Overly strict filters or incorrect spam settings might send legitimate emails to the Junk or Trash folder.

- Browser Cache or App Glitches: Cached data and outdated email apps can disrupt syncing or message retrieval.

- Blocked Senders or Blacklisted IPs: Accidentally blocking a sender or being on a spam blacklist may prevent messages from reaching your inbox.

- Security or Account Lock Issues: Suspicious login attempts or password errors can cause temporary account restrictions.

Step-by-Step Solutions to Fix SBCGlobal Email Not Receiving Emails

Let’s go through a series of troubleshooting steps to help you restore your email flow. You can perform these solutions on both desktop and mobile platforms.

1. Check SBCGlobal Email Server Status

- Sometimes, the issue isn’t on your end.

- Go to Downdetector or AT&T’s official website to see if SBCGlobal or AT&T Mail is down.

If there’s an outage, you’ll need to wait until the service is restored.

2. Verify Your Internet Connection

Ensure your device has a stable and fast internet connection. Poor connectivity can stop your email client from syncing or fetching new messages.

3. Update Incoming and Outgoing Mail Server Settings

Outdated or incorrect settings are the most common reason SBCGlobal email stops receiving messages. Here are the correct configurations:

Incoming Mail (IMAP) Server:

- Server: imap.mail.att.net

- Port: 993

- Encryption: SSL

- Username: Your full SBCGlobal email address

- Password: Your email password

Outgoing Mail (SMTP) Server:

- Server: smtp.mail.att.net

- Port: 465 or 587

- Encryption: SSL/TLS

- Requires Authentication: Yes

If you’re using POP3, use:

- Incoming server: inbound.att.net, Port 995 (SSL required)

- Outgoing server: outbound.att.net, Port 465 (SSL required)

Double-check these settings in your email client (Outlook, Apple Mail, Thunderbird, etc.) to make sure they match.
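If you prefer to check the values programmatically, the sketch below compares what your email client has against the IMAP and SMTP settings listed above. It is a minimal illustration only: `EXPECTED` and `find_mismatches` are hypothetical names, and the script does not connect to any server.

```python
# Expected values copied from the settings listed in this guide.
EXPECTED = {
    "imap": {"server": "imap.mail.att.net", "ports": {993}, "ssl": True},
    "smtp": {"server": "smtp.mail.att.net", "ports": {465, 587}, "ssl": True},
}


def find_mismatches(protocol: str, server: str, port: int, ssl_enabled: bool) -> list:
    """Return a list of human-readable problems; an empty list means a match."""
    expected = EXPECTED[protocol]
    problems = []
    if server.strip().lower() != expected["server"]:
        problems.append(f"server should be {expected['server']}, got {server}")
    if port not in expected["ports"]:
        allowed = " or ".join(str(p) for p in sorted(expected["ports"]))
        problems.append(f"port should be {allowed}, got {port}")
    if ssl_enabled != expected["ssl"]:
        problems.append("SSL/TLS encryption should be enabled")
    return problems


# Example: an account with an outdated port and encryption turned off.
for problem in find_mismatches("imap", "imap.mail.att.net", 143, False):
    print(problem)
```

An empty result means the entered values match the guide; any line printed points to the field to correct in your client.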

4. Review Spam and Junk Folder

- Sometimes, legitimate emails end up in the Spam or Junk folder. Open these folders and mark any wrongly filtered emails as “Not Spam.”

- Also, check your Filters and Blocked Addresses under email settings to ensure no important addresses are being redirected or blocked.

5. Clear Browser Cache or Update Your App

If you access SBCGlobal email through a browser:

- Clear your cache, cookies, and browsing history.

- Try opening email in incognito/private mode to rule out extensions or ad blockers causing problems.

If you use the Yahoo Mail App or Outlook, ensure the app is updated to the latest version. Outdated apps may not sync with the latest server configurations.

6. Check Mailbox Storage Limit

- SBCGlobal email accounts have a maximum storage quota.

- Delete unnecessary emails from your inbox, sent, and trash folders.

- After clearing space, refresh your inbox or restart your email client — new emails should start appearing.

7. Reset or Re-Add Your SBCGlobal Account

- If none of the above methods work, try removing your SBCGlobal account from your email client and re-adding it with the correct settings.

- This refreshes the connection and often resolves syncing or server timeout issues.

8. Reset Your Password

If you suspect your account might have been compromised or temporarily locked, resetting your password is a smart step.

- Visit the AT&T Password Reset page.

- Follow the on-screen steps to verify your identity.

- Set a strong, unique password and re-login to your email account.

9. Disable Security Software Temporarily

- Firewall or antivirus software can sometimes block email servers.

- Temporarily disable them (only if you’re confident about your network security) and check if you start receiving emails again.

10. Contact SBCGlobal Email Support

- If you’ve followed all the steps and your SBCGlobal email is still not receiving messages, the issue might be server-side or linked to account configuration.

- In that case, it’s best to contact SBCGlobal email support for expert help.

Certified technicians can assist with:

- Account recovery and login errors

- Server synchronization issues

- Email migration or backup

- Advanced spam and security settings

Having professional help ensures your account is restored quickly without losing any important messages or data.

Tips to Prevent Future SBCGlobal Email Problems

- Update Passwords Regularly: Keep your email account secure and avoid login lockouts.

- Use a Reliable Email App: Apps like Outlook or Apple Mail handle IMAP connections more efficiently.

- Backup Emails Periodically: Regular backups protect your messages from unexpected sync failures.

- Keep Storage Under Control: Delete old attachments and large files frequently.

- Monitor Account Activity: Check for unusual login attempts from unknown locations.

Final Thoughts

Facing issues like SBCGlobal email not receiving emails can be frustrating, especially when you rely on your email for important communications. However, most problems can be resolved by verifying server settings, clearing browser cache, managing storage, or resetting passwords.

If you continue to face challenges, don’t hesitate to reach out to expert SBCGlobal email support for personalized assistance. A few minutes of professional troubleshooting can save hours of frustration and get your SBCGlobal email running smoothly again.
